AI & Robochronicles: August 2020

Software Focus shares a compilation of recent artificial intelligence (AI) projects that are gaining steam and making a difference across industries and niches.

Dystopian Future

Today, it is easy to find a person through their social media accounts. Clearview AI, for example, is a startup whose service lets law enforcement go directly from a suspect’s photo to that person’s social media profiles.

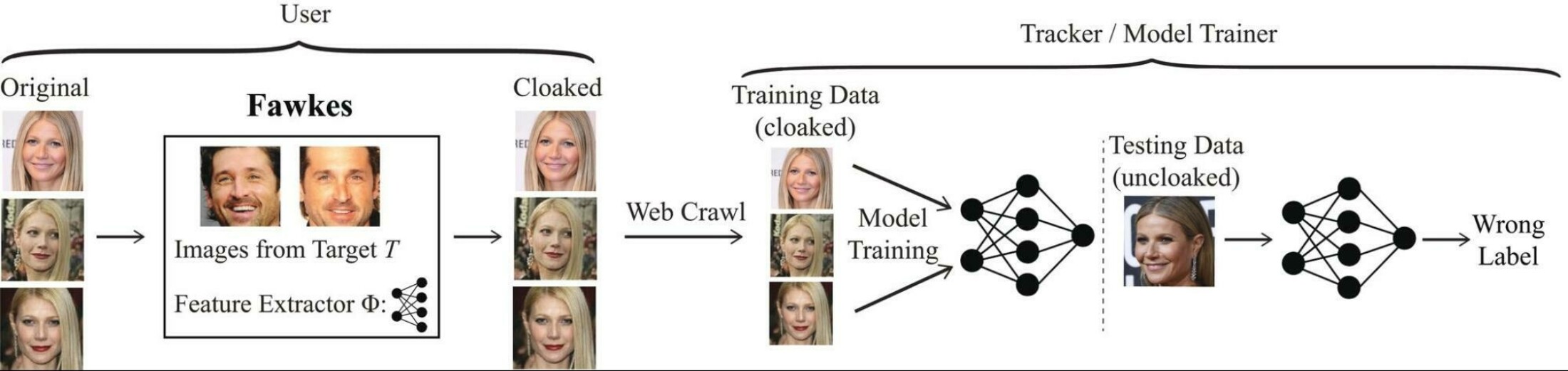

A team of engineers at the University of Chicago set out to counter such systems and developed a tool that cloaks photographs from facial recognition algorithms. The application, called Fawkes (a nod to the Guy Fawkes mask associated with the Anonymous movement), alters an image at the pixel level, subtly changing particular facial features.

The app became available to software developers about a month ago and has already been downloaded more than 50,000 times.

During testing, the engineers were able to fool facial recognition systems from Amazon, Microsoft, and the Chinese technology company Megvii.
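The pixel-level cloaking described above can be illustrated with a minimal sketch. It is not the University of Chicago team's actual algorithm. It makes two substitutions: a toy linear feature extractor stands in for a real face recognition model, and a simple per-pixel (L-infinity) budget stands in for the perceptual limit their tool uses. The hypothetical `cloak` function runs projected gradient descent. It nudges each pixel by at most `eps` so that the image's embedding moves toward a different `target` identity.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def embed(W: Matrix, x: Vector) -> Vector:
    """Toy linear 'feature extractor' (hypothetical): one embedding value per row of W."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def cloak(x: Vector, W: Matrix, target: Vector,
          eps: float = 0.05, lr: float = 0.1, steps: int = 200) -> Vector:
    """Projected gradient descent: move embed(W, x + delta) toward `target`
    while no pixel changes by more than `eps` and pixels stay in [0, 1]."""
    delta = [0.0] * len(x)
    for _ in range(steps):
        cloaked = [xi + di for xi, di in zip(x, delta)]
        residual = [e - t for e, t in zip(embed(W, cloaked), target)]
        # Gradient of ||W(x + delta) - target||^2 with respect to delta is 2 * W^T * residual.
        grad = [2 * sum(W[r][i] * residual[r] for r in range(len(W)))
                for i in range(len(x))]
        for i in range(len(x)):
            d = delta[i] - lr * grad[i]
            d = max(-eps, min(eps, d))            # per-pixel perturbation budget
            d = max(-x[i], min(1.0 - x[i], d))    # keep the pixel value in [0, 1]
            delta[i] = d
    return [xi + di for xi, di in zip(x, delta)]


x = [0.2, 0.4, 0.6, 0.8]            # a 4-pixel "photo"
W = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0]]          # toy 2-D feature extractor
target = [1.0, 1.0]                 # embedding of a different identity
cloaked = cloak(x, W, target)
print([round(p, 3) for p in cloaked])
```

Each pixel may move only within `eps`, so the cloaked image looks almost unchanged. Its position in feature space, which is all a recognition model compares, has still shifted toward another identity.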

iPhone as a Webcam

NeuralCam Live is an app that turns Apple smartphones into smart webcams. Combining the iPhone camera with machine learning can deliver much better video quality than a typical webcam. Once the iOS app and the Mac driver are installed, the iPhone streams the processed video to the computer in real time. The developers promise to release a Windows desktop version in the near future.

Because video processing takes place directly on the device, the company is also developing an iOS SDK that would let third-party apps apply “improvements” in real time, for example evening out skin tone, applying filters, or increasing brightness as needed.

The app can assess the current lighting conditions and enhance the image accordingly. NeuralCam Live can also instantly blur the camera feed if it detects, for example, nudity. NeuralCam Live is free to download, and almost all of its core features are available in the free version.
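NeuralCam has not described how its lighting adjustment works, and its pipeline relies on machine learning. The sketch below is a rough classical stand-in. It estimates scene brightness as the mean Rec. 601 luma of a frame. It then applies a gamma curve chosen so that a value at that mean brightness maps to a hypothetical `target` of 0.5. The function names and the target value are assumptions for illustration.

```python
import math
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB channels, each in [0, 1]


def mean_luma(pixels: List[Pixel]) -> float:
    """Average Rec. 601 luma of a frame: a simple measure of scene brightness."""
    return sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels) / len(pixels)


def auto_gamma(pixels: List[Pixel], target: float = 0.5) -> List[Pixel]:
    """Apply one gamma curve to the whole frame, chosen so that a channel value
    equal to the frame's mean luma is mapped to `target` (hypothetical default)."""
    m = mean_luma(pixels)
    if m <= 0.0 or m >= 1.0:
        return list(pixels)  # all-black or all-white frame: leave it unchanged
    gamma = math.log(target) / math.log(m)  # gamma < 1 brightens, gamma > 1 darkens
    return [(r ** gamma, g ** gamma, b ** gamma) for r, g, b in pixels]


dark_frame = [(0.05, 0.08, 0.10), (0.20, 0.18, 0.15), (0.10, 0.12, 0.30)]
print(round(mean_luma(dark_frame), 3), round(mean_luma(auto_gamma(dark_frame)), 3))
```

A dark frame (mean luma below 0.5) gets a gamma below 1, which brightens it. An overexposed frame gets a gamma above 1, which darkens it. A learned model could instead treat each region of the frame differently.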

The app supports Zoom, Google Meet, and Microsoft Teams, but doesn’t work with Apple’s FaceTime or with Safari on Mac. It does, however, work with Chrome, Firefox, and any conferencing application that supports virtual webcams.

Fooling Face Recognition

Researchers at McAfee have discovered a potential vulnerability in existing facial recognition algorithms.

To demonstrate it, they photographed employees from different angles, with different facial expressions, and under different lighting conditions, taking about 1,500 photos of each participant. The images were fed to FaceNet, an open-source face recognition neural network. CycleGAN and StyleGAN were then used to replace one participant’s face with the image of another. The recognition algorithm then mistook the generated image for the original.

Using a similar trick, attackers could bypass automated face recognition systems, for example at automatic passport control. All it would take is loading a photo of another person onto the passport chip. Of course, this would not work if a security officer also checked the passport. But that is exactly the researchers’ point: do not rely entirely on automated identification or user verification systems, which can be vulnerable.
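The attack works because systems like FaceNet do not compare photos directly. They map each face to an embedding vector and treat two faces as the same person when the distance between their embeddings falls below a threshold. The sketch below shows that decision rule with toy 3-dimensional embeddings. FaceNet's real embeddings are 128-dimensional. The threshold of 1.1 is an assumption chosen for illustration, not a value from the McAfee study.

```python
import math
from typing import List

Embedding = List[float]


def l2_normalize(v: Embedding) -> Embedding:
    """Project an embedding onto the unit sphere, as FaceNet does."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def same_person(a: Embedding, b: Embedding, threshold: float = 1.1) -> bool:
    """Verification rule: accept if the Euclidean distance between the
    normalized embeddings is below `threshold` (illustrative value)."""
    a, b = l2_normalize(a), l2_normalize(b)
    return math.dist(a, b) < threshold


# Toy embeddings: the enrolled passport photo, an unrelated face, and a
# generated image whose embedding was pushed close to the enrolled one.
enrolled = [1.0, 0.0, 0.0]
stranger = [0.0, 1.0, 0.0]
generated = [0.9, 0.1, 0.0]

print(same_person(enrolled, stranger))   # distance ~1.41: rejected (False)
print(same_person(enrolled, generated))  # distance ~0.11: accepted (True)
```

Nothing in this rule checks what a human would see in the photo. That is why a human check at the border still matters.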

Neural Network As a Blogger

Can AI blog about our everyday lives better than we can?

Liam Porr, a student at UC Berkeley, promoted a blog whose posts were written by OpenAI’s GPT-3 neural network.

As a result, the blog gained sixty subscribers and more than 26,000 visitors in just two weeks. Of all those readers, only a couple suspected the posts were written by AI. Other GPT-3 use cases range from a recipe generator to a search engine.

Scientists Show How Computers Fantasize

MIT scientists have released a creativity test for neural networks, specifically for GANs (generative adversarial networks). A GAN is an unsupervised machine learning approach in which two neural networks “compete” with each other. One network, the generator, produces samples. The other, the discriminator, tries to distinguish real samples from generated ones.
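The “competition” between the two networks is usually written as a minimax game, in the standard formulation from Goodfellow et al. (2014). In it:

- $D(x)$ is the discriminator's estimated probability that $x$ is a real sample.
- $G(z)$ is the sample the generator produces from random noise $z$.
- $p_{\text{data}}$ and $p_z$ are the data and noise distributions.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator maximizes $V$ by scoring real samples near 1 and generated ones near 0. The generator minimizes $V$ by producing samples the discriminator scores as real. The article does not say which exact objective the MIT team's models were trained with.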

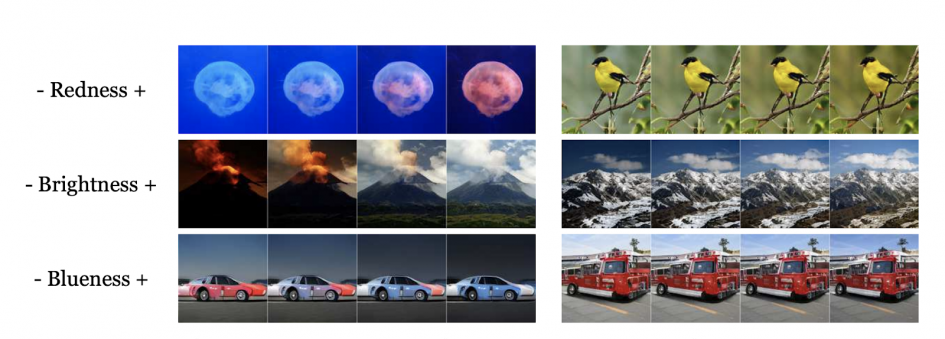

The scientists took GANs that had been trained on 14 million photographs from ImageNet and “pointed” the models at particular objects in the images. They then asked the models to depict those objects in close-up, in bright light, rotated in space, or in different colors. The aim of the study was to understand whether neural networks can represent our three-dimensional world in all its diversity.
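The article does not say how the models were “pointed” at a transformation. One approach, which we assume here, is a walk in the GAN's latent space. An edited image is generated from `z + alpha * w`, where:

- `z` is the original latent code.
- `w` is a direction associated with an edit such as zoom or brightness.
- `alpha` sets the strength of the edit.

In the sketch below, `toy_generator` is a hypothetical stand-in for a trained generator. The brightness direction is chosen by hand rather than learned.

```python
from typing import List

Vector = List[float]


def latent_walk(z: Vector, direction: Vector, alphas: List[float]) -> List[Vector]:
    """Return the edited latent codes z + alpha * direction, one per step size."""
    return [[zi + a * di for zi, di in zip(z, direction)] for a in alphas]


def toy_generator(z: Vector) -> Vector:
    """Hypothetical stand-in for a trained GAN generator: maps a 2-D latent code
    to a 4-pixel grayscale 'image' whose brightness is driven by z[0]."""
    base = 0.5 + 0.1 * z[0]
    return [min(1.0, max(0.0, base + 0.05 * z[1] * k)) for k in range(4)]


def brightness(image: Vector) -> float:
    """Mean pixel value of a grayscale image."""
    return sum(image) / len(image)


# Walk along a hand-picked (hypothetical) "brightness" direction and decode each step.
z = [0.0, 0.0]
brightness_direction = [1.0, 0.0]
for code in latent_walk(z, brightness_direction, [-1.0, 0.0, 1.0]):
    print(round(brightness(toy_generator(code)), 2))
```

Decoding the three latent codes yields images whose mean brightness increases with `alpha`.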

Results

According to the scientists, such experiments will also help reveal which creative techniques people use to capture reality in front of the lens.

For example, the neural network was able to show a red balloon from different angles, but it failed to rotate a pizza.

Zooming out turned the cat into a puddle of fur, but the robin stayed sharp regardless of the distance. The model easily repainted the car blue and the jellyfish red. It would not, however, depict the fire engine or the goldfinch in anything other than their usual colors. When the mountain landscape was darkened, the model added a volcanic eruption, as if it understood that this was the only possible source of light in the dark.

Although neural network algorithms are becoming more complex, they still rely on data. Their creative process reflects the biases and stereotypes of thousands of photographers: which angles and subjects they choose and how they portray objects in front of the lens. So far, unlike humans, neural networks are not able to represent the physical world in all its conceptual diversity.

Stay tuned to Software Focus!