Google Neural Network Translates Web Pages Into Video

Google AI has demonstrated how a neural network translates web pages into videos.

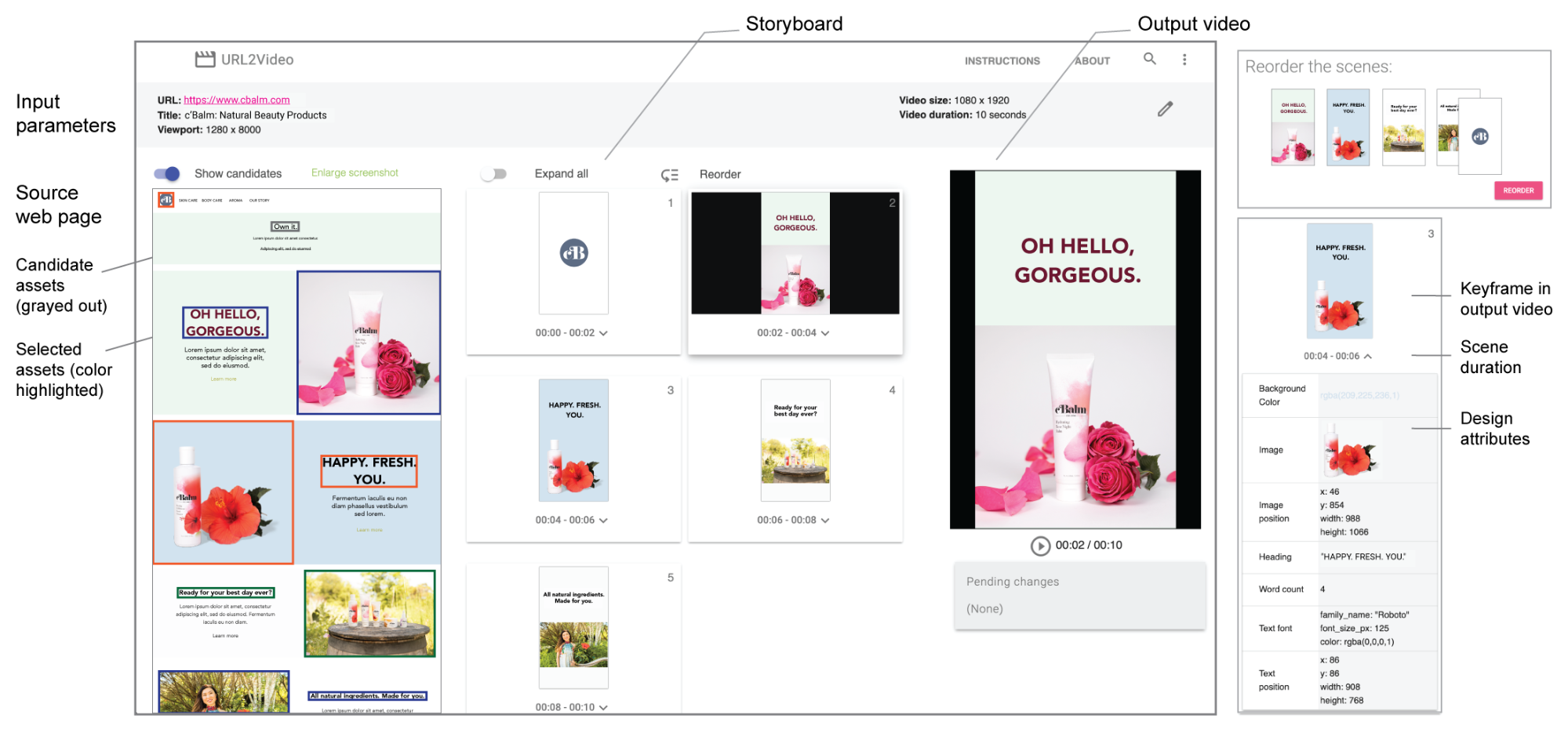

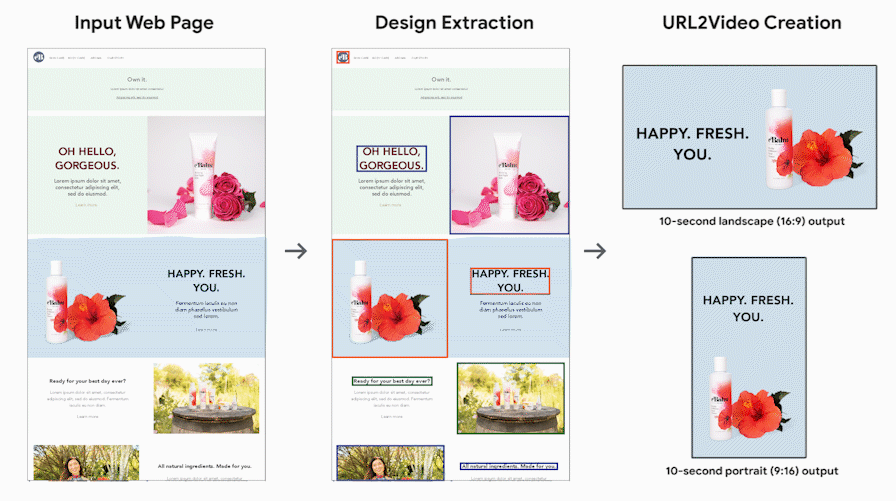

URL2Video is an automatic pipeline that converts a web page into a short video within the time and visual constraints set by the content owner. The tool extracts resources (text, images, or video) and their design styles (including fonts, colors, graphic layouts, and hierarchy) from the HTML source and arranges them into a sequence of shots that follow the look of the original page. The user then sets the dimensions and duration of the clip, and the tool renders the extracted material into a video.
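The extraction step can be illustrated with a minimal sketch. It is not URL2Video's actual implementation, which is not shown in this article; it only assumes that headings, paragraphs, and images are collected in page order using Python's standard `html.parser` module. The names `Asset`, `AssetExtractor`, and `extract_assets` are hypothetical, and design styles such as fonts and colors are left out for brevity.

```python
from dataclasses import dataclass
from html.parser import HTMLParser


@dataclass
class Asset:
    kind: str      # "heading", "text", or "image"
    content: str   # the text itself, or the image URL
    order: int     # position on the page, 0 = topmost


class AssetExtractor(HTMLParser):
    """Hypothetical helper: collects headings, paragraphs, and images in page order."""

    TEXT_TAGS = {"h1": "heading", "h2": "heading", "h3": "heading", "p": "text"}

    def __init__(self):
        super().__init__()
        self.assets = []
        self._open_kind = None   # kind of the text element currently open, if any
        self._buffer = []

    def _add(self, kind, content):
        self.assets.append(Asset(kind, content, len(self.assets)))

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self._add("image", src)
        elif tag in self.TEXT_TAGS:
            self._open_kind = self.TEXT_TAGS[tag]
            self._buffer = []

    def handle_data(self, data):
        if self._open_kind is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag in self.TEXT_TAGS and self._open_kind is not None:
            # Collapse runs of whitespace so the text is ready for display.
            text = " ".join("".join(self._buffer).split())
            if text:
                self._add(self._open_kind, text)
            self._open_kind = None


def extract_assets(html):
    """Return the page's assets as a list ordered from top to bottom."""
    parser = AssetExtractor()
    parser.feed(html)
    parser.close()
    return parser.assets
```

Calling `extract_assets` on a page's HTML string returns a list of `Asset` records whose `order` field preserves the top-to-bottom position, which a later stage could use as a rough measure of importance.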

The URL2Video pipeline determines the temporal and visual presentation of each resource using a set of heuristics that the researchers gathered from interviews with designers. These heuristics capture common video editing practices, including establishing a content hierarchy, limiting the amount of information in a shot and its duration, and keeping colors and styles consistent for branding.

URL2Video automatically sets the duration of each visual element so that viewers have enough time to perceive it. A short video therefore highlights only the most important information, which typically sits near the top of the page.
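One simple way to picture this trade-off between clip length and readability is sketched below. This is an assumption for illustration only, not the heuristic URL2Video uses: the function `plan_shots` and the `min_shot_seconds` threshold are hypothetical. The sketch keeps as many top-of-page items as fit when every shot needs a minimum time on screen, then splits the clip evenly among them.

```python
def plan_shots(assets, total_seconds, min_shot_seconds=2.0):
    """Pick top-of-page assets and assign each an on-screen duration.

    assets: items ordered from the top of the page to the bottom.
    total_seconds: clip length requested by the user.
    min_shot_seconds: assumed minimum time a viewer needs per shot.
    Returns a list of (asset, seconds) pairs.
    """
    if total_seconds <= 0 or min_shot_seconds <= 0:
        raise ValueError("durations must be positive")

    # The clip cannot hold more shots than this without rushing the viewer.
    max_shots = int(total_seconds // min_shot_seconds)

    # Shorter clips keep fewer items, so only the topmost content survives.
    kept = list(assets)[:max_shots]
    if not kept:
        return []

    seconds_each = total_seconds / len(kept)
    return [(asset, seconds_each) for asset in kept]
```

For example, with eight items and a 12-second clip, the default threshold leaves room for six shots of two seconds each, so the two items lowest on the page are dropped.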

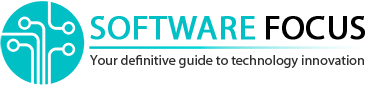

The research prototype's interface lets the user review the design attributes of each shot extracted from the original page, reorder materials, change design elements such as colors and fonts, and adjust the constraints to generate a new video.

The video below shows how URL2Video converts a page containing several short video clips into a 12-second output video:

The researchers are now working on adding soundtracks and voice-over to the generated videos.
